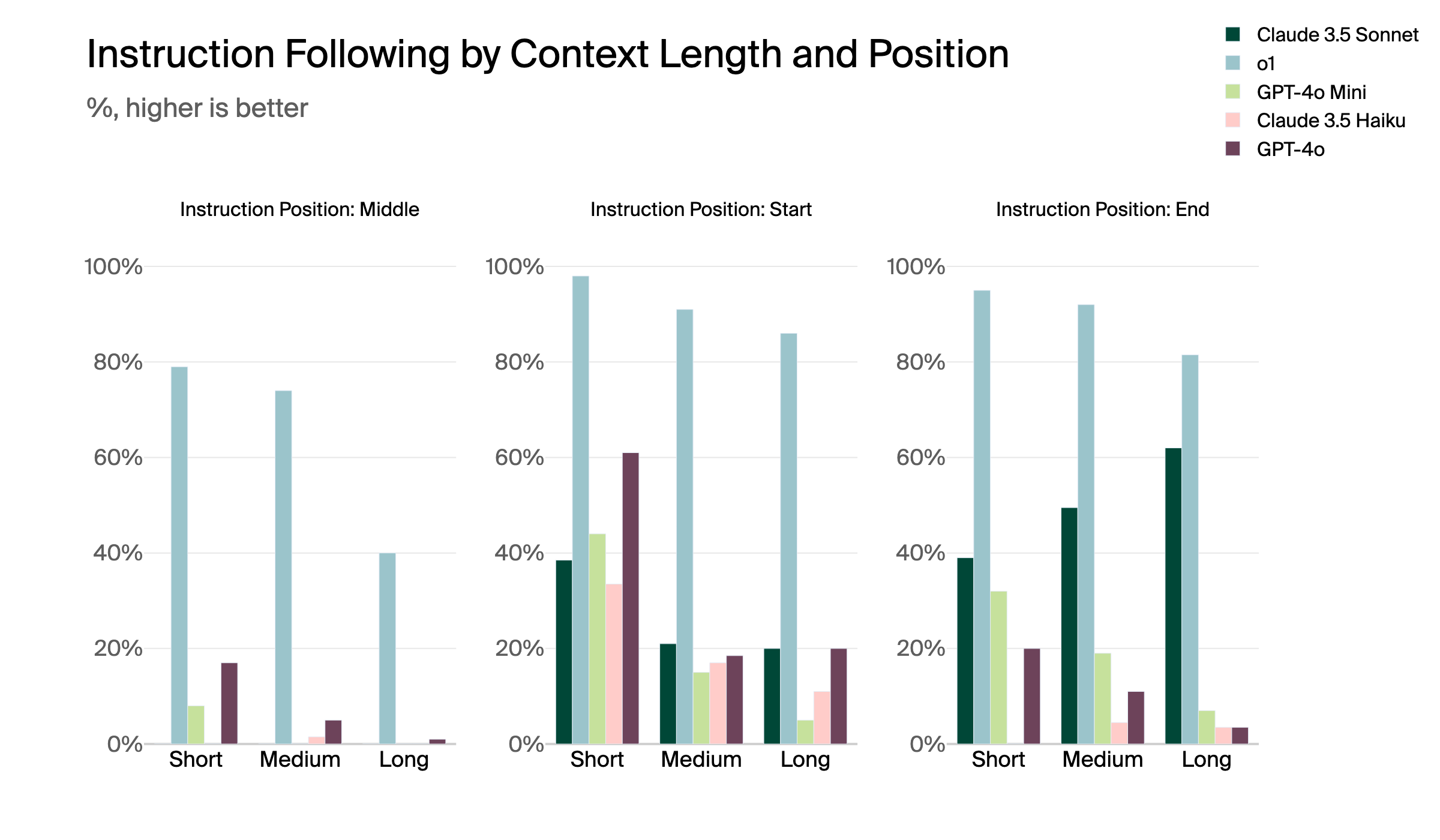

Long-context Instruction Following

Do models remember instructions as the context length grows?

In this benchmark, LLMs answer questions about a text of increasing length. Key instructions are placed throughout the text, much as humans often do when interacting with AI assistants. We compare how well models adhere to these instructions as the context grows.

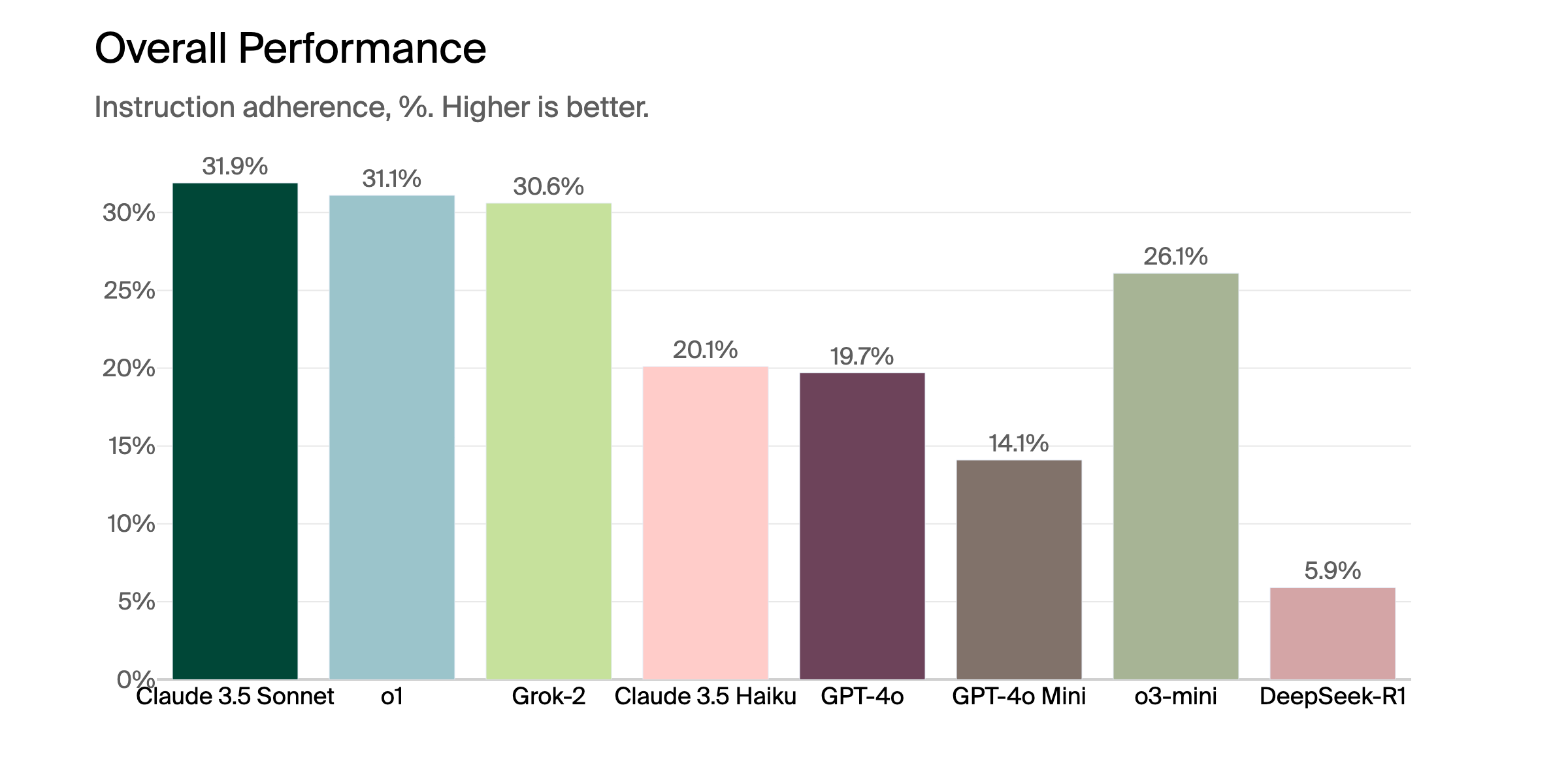

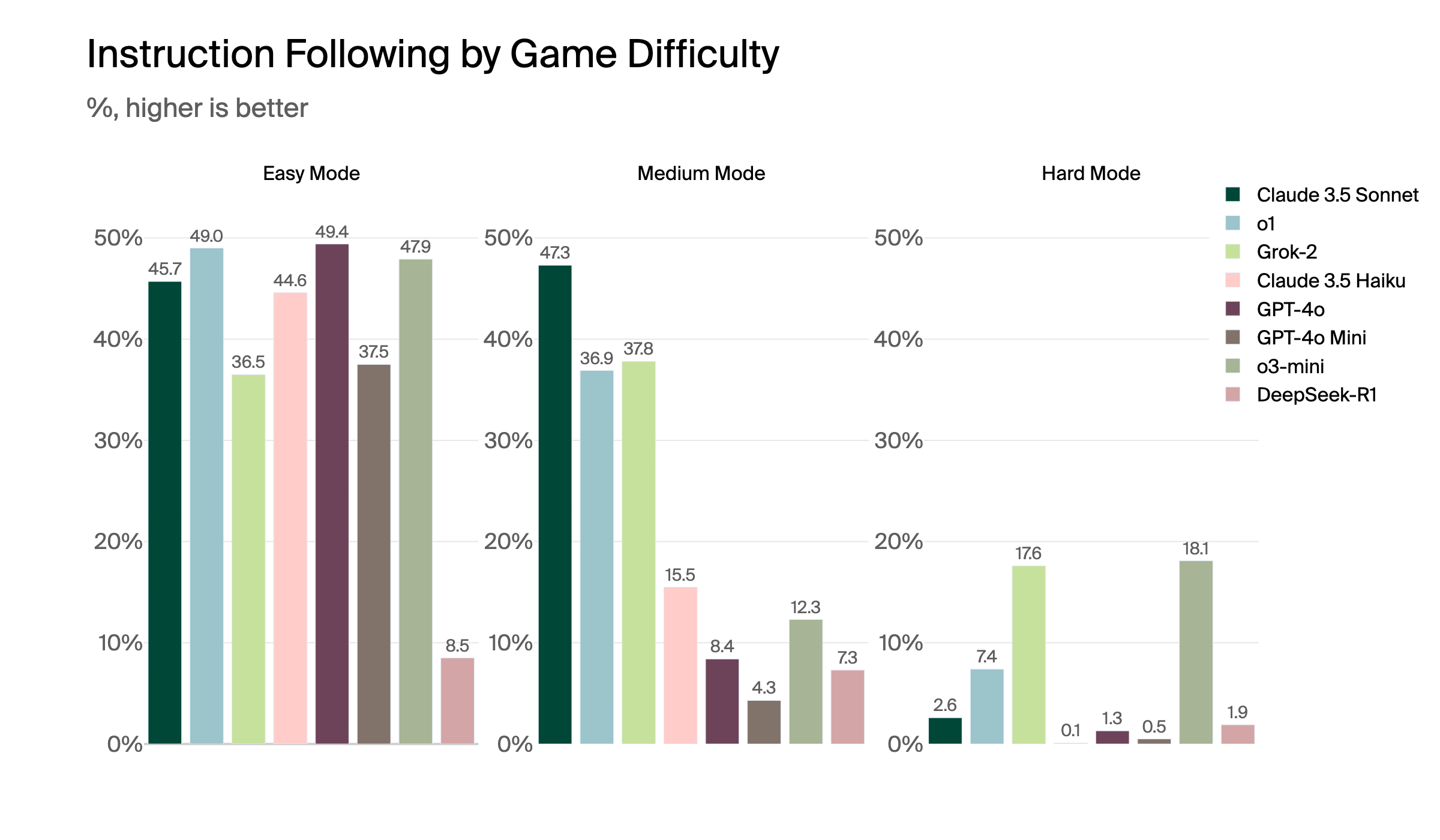

Logical Instruction Following

How well do models follow instructions while performing a task that requires analytical thinking?

In this benchmark, LLMs play text-based games under very strict format constraints. We compare how well models adhere to these format instructions while playing logically demanding games.

We offer detailed analytics to help AI researchers understand their model’s performance.

Want to dig deeper? Contact us at founders@andonlabs.com.